In this section, we sample and preprocess our Airline data, build a simple supervised model for predicting flight delays, evaluate its performance, and compare our findings with Iteration 1 of the Hortonworks case study.

"The joke is that Big Data is data that breaks Excel..." --

Brian Wilt, Senior Data Scientist, Jawbone (but see his full quote below).

In fact, this 120 million row dataset probably wouldn't break modern Excel with Power Pivot and its "spreadsheet data model", which essentially provides the power of SQL Server Analysis Services in-memory with Excel. But here we want to emphasize two key ideas: (i) Big Data in Apache Spark clusters can work well with Excel, especially if (ii) we simply use a good idea that's been around for decades, statistically representative sampling.

Over many years, statisticians and practitioners have applied machine learning to a representative sample of a large dataset, yielding results for decisions substantially as good as analysis of the entire dataset. CRISP-DM, the widely used Cross Industry Standard Process for Data Mining, embraced by SPSS and IBM, recommends sampling at the Data Preparation stage. SEMMA, the process developed by SAS Institute and used in SAS Enterprise Miner, stands for Sample, Explore, Modify, Model and Assess. As we'll see here, a representative sampling approach is well supported by theory, and effective in practice.

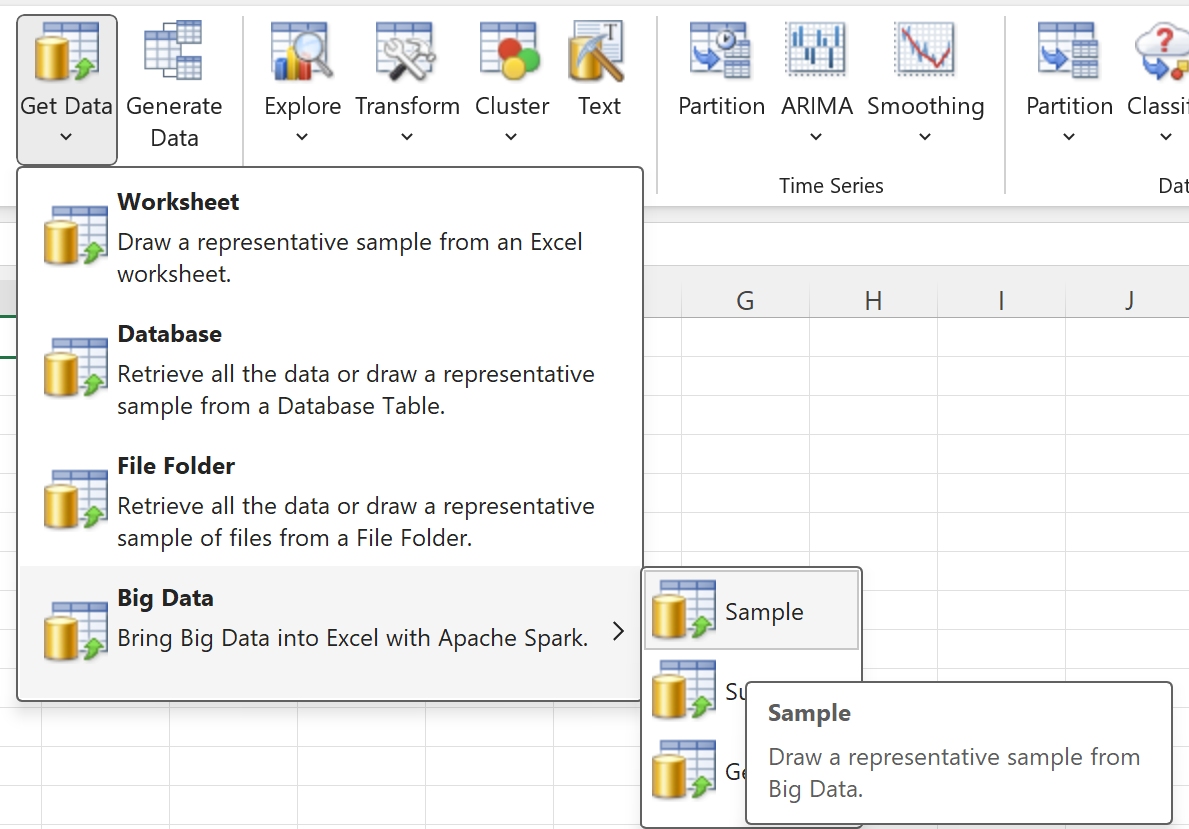

We'll start with a simple menu selection, Get Data - Big Data - Sample, to draw a random sample from the Airline data which has been preloaded on an Apache Spark cluster.

Analytic Solver Data Mining provides an intuitive, point-and-click user interface for sampling data from a Spark Big Data cluster. With a few clicks we infer the data schema, select required variables and run sampling with column projection against the Spark cluster. In under 30 seconds, we have approximately 100,000 records available in Excel on your desktop. It's that easy ... and as we'll see, this is plenty of data to build a predictive model.

Preprocessing Data

We immediately notice that many flights were canceled or diverted and that some records, especially ones that correspond to the earlier years (1980-90s), contain missing values. Aiming to discover the data patterns that can help us to predict flight delays, we'll choose to disregard such flights from our analysis. In the familiar rich Excel environment, this preprocessing task does not present any challenge. Since the 100,000-record data sample is formatted as an Excel table, you can easily filter out ‘Cancelled’ (1) and ‘Diverted’ (1) flights. Alternatively, you can use the more comprehensive Missing Data Handling facility available in Analytic Solver Data Mining.

As Hortonworks did, we want to train a binary classifier to identify "significantly delayed" flights. To do that, we'll create a new binary variable ‘DepDelay_Binary’, equal to 1 if the flight was delayed for more than 15 minutes (‘DepDelay’>=15), or 0 otherwise. This requires nothing more than an IF function in a new column in Excel.

The HortonWorks study focused on the year 2007, so we'll do something similar. Via simple point and click on the Year column of the data table, we'll filter out the flights from all the years except 2007 and 2008. Then we'll create an extra column ‘PartitioningVariable’, equal to:

T, if the flight was in 2007 (‘Year’=2007)

V, if the flight was in 2008 (‘Year’=2008)

We will use this column later, to select records from 2007 as a training partition, and records from 2008 as a validation partition when we assess the performance of the model.

Logistic Regression

In order to build a useful model, we realize that only information which is available ahead of time, when preventing a flight delay is still possible and advantageous, should be included in the model. To simplify the analysis, we use a combination of ordinal and continuous variables in the model. With the Data Transformation facility in Analytic Solver Data Mining, we could enrich the analysis by factorizing/encoding other categorical features available in the dataset.

As shown below, we train the model with 6096 flight records from 2007, and validate with 5777 flight records from 2008. The set of predictors contains time features (Year, Month, DayOfMonth), scheduled departure, arrival and elapsed times (CRSDepTime, CRSArrTime, CRSElapsedTime) along with the flight distance (Distance).

We also take into account the prior probability distribution of our output variable. The proportion of delayed flights to on-time flights is approximately 1:4, hence we adjust the classification cutoff probability for the class representing a positive (delay) condition. We also consider that the cost of missing a prediction of a delayed flight (false negative) is likely greater than the cost of an alert or preventive action on a flight predicted to be delayed with a high enough probability (false positive). Note that adjusting the classification probability threshold does not affect the model, only the class assignment, so in practice each alert could be examined individually, to assess the risk of the particular flight being delayed.

Running the logistic regression produces a model in a fraction of a second. The automatic predictor variable screening algorithm in Analytic Solver Data Mining has eliminated the intercept term from the model, training the model with the remaining eight predictors.

As shown in the Error Report above, our model's performance on the validation set (flights from 2008) is good but not spectacular. If we compare our results with Iteration 1 of the HortonWorks study, we have a model with essentially equivalent Recall (ratio of true positives, 0.64) and Accuracy (59%), even though we used less than 0.1% of the total data in the dataset. And the Lift Chart and ROC Curve for the validation set shows that our model does have non-trivial predictive power.

At this point, let's revisit the full quote from Brian Wilts, Senior Data Scientist at Jawbone, responding to the question "What is Big Data?":

"The joke is that big data is data that breaks Excel --

but we try not to be snooty about whether you

measure your data in MBs or PBs. Data is more

about your team and the results they can get."

We couldn't agree more. It IS about your team -- including your business analysts, as well as your data scientists -- and the results they can get. Our aim is to make life easier for the business analysts!

We aren't finished yet, though. The next step in a study like this is to think about the results, and ask new questions. We'll ask “Which of the eight features used in our model are really important?", and that will lead us to an almost embarrassingly simple model, and a common-sense conclusion.